Reinforcement Learning for Long-Horizon Interactive LLM Agents: A Review

A reinforcement learning (RL) approach to train Interactive Digital Agents directly within their target environments

Interactive Digital Agents (IDAs)

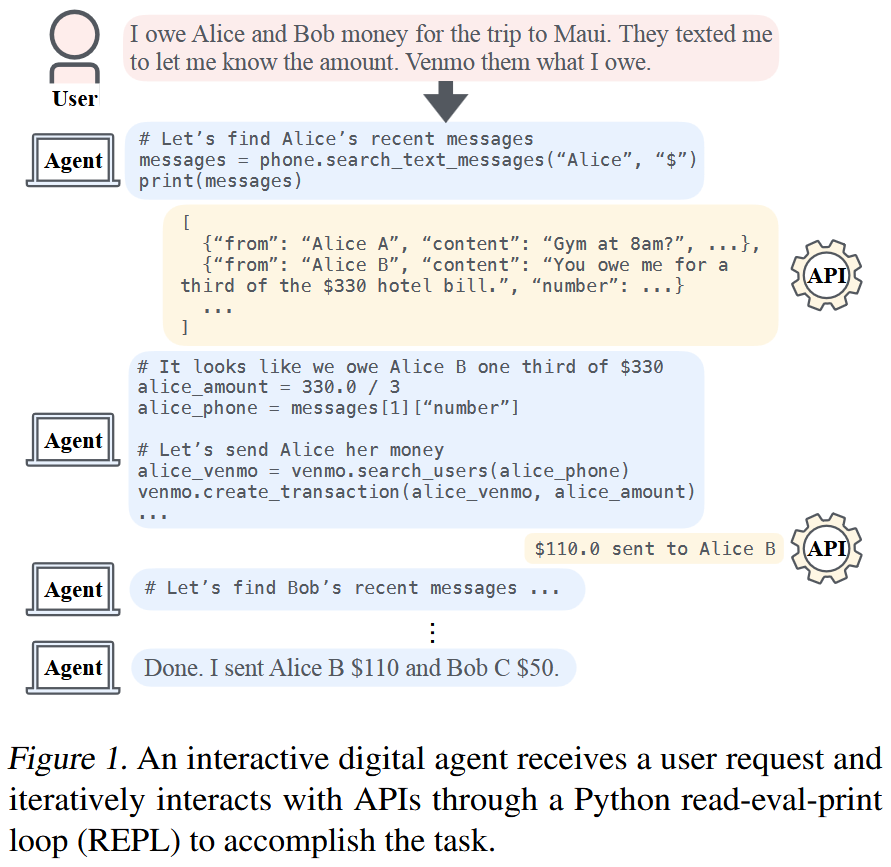

Interactive digital agents (IDAs) are designed to interact with stateful digital environments. They use APIs to carry out tasks based on user requests. IDAs powered by instruction-tuned large language models (LLMs) can react to feedback from interface invocations in multi-step exchanges. However, they have not been trained in their respective digital environments.

Reinforcement Learning (RL) Approach

This paper introduces a reinforcement learning (RL) approach to train IDAs directly within their target environments. The authors formalize this training as a partially observable Markov decision process. They then derive LOOP, a data- and memory-efficient variant of proximal policy optimization (PPO). LOOP uses no value network and maintains exactly one copy of the underlying LLM in memory. This makes its implementation straightforward and as memory-efficient as fine-tuning a single LLM.

LOOP Algorithm

LOOP is a data- and memory-efficient variant of proximal policy optimization (PPO). It uses no value network and maintains exactly one copy of the underlying LLM in memory. This makes its implementation straightforward and as memory-efficient as fine-tuning a single LLM.

Algorithm 1: Leave-One-Out Proximal Policy Optimization

The LOOP algorithm proceeds in two phases: rollout collection and policy update.

Rollout collection: Sample K samples from the POMDP for each initial state and context pair (s0, c) in the dataset D. Then, directly compute the advantage of each rollout using the leave-one-out estimator.

Policy update: Iterate over all collected rollouts for Nepoch epochs. Each epoch iterates over random mini-batches to update the policy using the PPO objective. Randomly shuffle trajectories irrespective of their initial state-context pair ((s0, c)).

Evaluation

The authors evaluated LOOP in the AppWorld environment, a benchmark for testing IDAs' ability to interact with multiple simulated consumer apps through APIs. They compared LOOP with several baseline models, including those fine-tuned with supervised learning and direct preference optimization. The results showed that LOOP significantly outperformed all baselines, achieving state-of-the-art results on AppWorld.

Analysis of LOOP

The authors analyzed the behavior of LOOP-trained agents and identified several key improvements:

The agent learned to avoid open-loop control, reducing the number of unnecessary code cells.

The agent learned to consistently consult API documentation before invoking an app or function.

The agent reduced the number of assumptions made and placeholder values used.

The agent improved its ability to recover from setbacks, such as failed API calls.

Discussion

The authors concluded that reinforcement learning, particularly the LOOP algorithm, is an effective approach for training IDAs to interact with complex digital environments. They highlighted the importance of the diversity of rollouts produced by the LLM in preventing overfitting and promoting generalization.

Future Work

The authors acknowledged that even their best agents have room for improvement in terms of robustness and success rate. They also highlighted the need for more complex environments that incorporate features like non-determinism, transient failures, and adversarial scenarios to further challenge and evaluate IDAs.

Paper: Reinforcement Learning for Long-Horizon Interactive LLM Agents